Every day, developers complain that AI assistants write terrible code, miss obvious bugs, or spiral into endless loops. Meanwhile, I'm shipping production features faster than ever with the same tools. It took me months to realize that working with AI isn't about better prompts—it's about applying the same collaboration skills I use in pair programming.

Today I’d like to share 10 AI-driven development heuristics I’ve been using over the last few months to build real-world, production-grade software that real users rely on today.

Before we get into it, let me provide some definitions, disclaimers, tools, and context.

Definition

Heuristic: a practical approach to solving a problem when finding the perfect solution would be too slow, complex, or impossible.

Disclaimers

I am using "heuristics" loosely here to cover rules of thumb, workflows, and practices.

You may be using a different coding agent, so some of this may not apply to you. I’ve tried to keep things general, but this is simply what has worked for me.

Tools

- Claude Code (I will refer to this agent as Claude, it, or the bot)

- WebStorm (my IDE of choice)

- Git (frequent commits, because bots can mess things up quickly)

Context

I gathered all of these heuristics while delivering user stories for an actively used product. These are not necessarily the same heuristics I use when vibe coding on a personal project.

10 heuristics

1. Stop the bot when it starts to wander and gets lost

After prompting Claude to introduce a small increment of functionality, I continue to pay attention. I read almost everything it’s doing, thinking, planning, and pondering. Instead of treating Claude like a worker bee, I treat it as my pair. We’re collaborating to reach an optimal solution that works and is easy to extend.

While reading, I sometimes disagree with a specific approach Claude decides to take. When that happens, I press the Esc key, which stops the bot, and dig in further. This gives me time to read the same files Claude is reading and decide what I think the best approach is. Sometimes I realize it’s taking the right approach after all and tell it to continue. Claude picks up right where it left off, as if I had never stopped it.

Other times, I see a better way of approaching the problem or spot the root cause of the error. Instead of just telling Claude to continue, I relay my findings to it. This usually prompts Claude’s favorite saying, “You’re absolutely right!” It then reevaluates its task list and continues with the new information in mind.

Instead of waiting for Claude to finish the whole solution, I actively review its thought process. This is a habit I’ve picked up from many years of pair programming. I always want my pair and me to be on the same page and aligned on the path we’re taking. While pairing, it’s common to stop and talk about what’s happening, where we’re going, and whether that’s still a sensible direction. These constant check-ins save a lot of time and are part of the work. This is collaborative engineering. I’ve brought the same collaborative spirit to my interactions with Claude and found it incredibly rewarding.

2. Find the issue myself

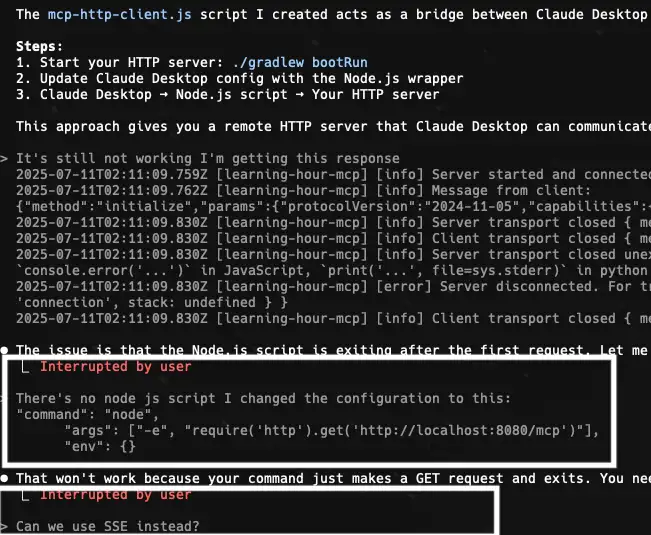

One of the biggest arguments against using coding agents is that they get stuck in loops when debugging and investigating tricky bugs and errors. I’ve experienced this myself, but solving these issues isn’t Claude’s job alone; it needs my input and assistance too. I give Claude 2–3 chances to find the root cause and resolve the issue before stopping it and investigating myself. This happened just the other day.

Claude and I were adapting an LLM test to use Test Containers instead of a locally running MySQL instance. Everything seemed to be set up correctly, but when we added the next test, which triggered a read of the test data, the Chat Assistant’s tool call failed. So I told Claude to fix it and make the test pass. It added tons of logging and tried an array of different things, but it couldn’t tell me why the test was failing. After a few rounds of this, I stopped Claude and investigated the issue myself.

I set breakpoints in WebStorm and finally got to the root cause: the reads made by the function calls were not targeting the correct MySQL instance. The app initialized its database connection on startup, but when running these tests we wanted to use only the Test Containers database. Now that I knew exactly what the issue was, I relayed it to Claude, and it pursued several different solutions.

Unfortunately, I had to bail on the Test Containers approach because of how this monolith was structured, and we could not justify refactoring it to make it easier to substitute databases on startup. Still, the point stands: simply telling Claude to fix an issue is not always enough. For more complex issues, having a human step in and find the root cause is cheaper in both time and tokens. Most of the time, once Claude understands the issue, it’s able to engineer a working solution.
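To make that kind of root cause concrete, here is a minimal, hypothetical TypeScript sketch. The real app’s code isn’t shown here, so `Connection`, `connect`, the table name, and the URLs are all made-up stand-ins, and the fake connection only echoes which database a query would have hit. It contrasts a connection created once at startup, which every read is stuck with, against a function that receives its connection as a parameter, the kind of seam that would let a test substitute a Test Containers database.

```typescript
// Hypothetical stand-in for a MySQL client connection; no real driver is used.
interface Connection {
  readonly url: string;
  query(sql: string): string[];
}

// Hypothetical factory. A real app would open a MySQL connection here;
// this fake just reports which database a query would have hit.
function connect(url: string): Connection {
  return {
    url,
    query: (sql: string) => [`${url} <- ${sql}`],
  };
}

// Startup-coupled style: the connection is created when the module loads,
// so every read goes to this database no matter what a test wants.
const appConnection: Connection = connect("mysql://localhost:3306/app");

function loadProductsCoupled(): string[] {
  return appConnection.query("SELECT * FROM products");
}

// Injectable style: the caller chooses the connection, so a test can pass
// one that points at a container-managed database instead.
function loadProducts(conn: Connection): string[] {
  return conn.query("SELECT * FROM products");
}

// Usage: the port below is made up; a container would assign its own.
const testConnection: Connection = connect("mysql://localhost:49153/test");
console.log(loadProductsCoupled()[0]); // mysql://localhost:3306/app <- SELECT * FROM products
console.log(loadProducts(testConnection)[0]); // mysql://localhost:49153/test <- SELECT * FROM products
```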

3. Extended Thinking Modes

These modes cost more, so I use them pragmatically. Here are a few situations in which I trigger them:

- After Claude has failed a task once and seems to be getting confused. This usually gets it back on task and either leads to a solution or helps me discover the issue for Claude.

- On an initial prompt that seems very complex, when I want Claude to use as many of its resources as possible.

- Before embarking on a complex task, when I want Claude to think, plan, and discuss different options.

I usually start with “think hard” and escalate to the higher thinking modes on later prompts if needed. Like humans, coding agents perform better when they track their thought process. Thinking aloud is a crucial part of pairing, so a mode like this makes it even easier to pair with Claude.

All you need to do is include the ‘magic’ phrase somewhere in your prompt. As Anthropic’s Claude Code Best Practices puts it, “Each level allocates progressively more thinking budget for Claude to use.”

The modes are:

- think

- think hard

- think harder

- ultrathink

4. Ask me questions

Sometimes, at the end of an initial prompt, I tell Claude to ask me any clarifying questions if needed. The “if needed” phrasing is important: if you simply tell Claude to ask you questions, it will, and some of them may be silly or unnecessary questions it could answer on its own using its many tools.

Some reasons why I tell Claude to ask questions are:

- to give it any missing information it needs to solve the problem

- to explore different solutions

- to gauge whether Claude understands the task at hand

It can be tedious to answer all the questions. I usually skip the ones that aren’t relevant, but the exercise helps get Claude and me aligned on the task.

5. Claude the student

Coding agents can do some amazing things for professional programmers, but they need to be mentored along the way. I continually educate Claude on how to iterate toward maintainable software. We debate Object-Oriented principles, DDD, refactoring, and iterative development. Coding agents are willing pairs that will conform to your style, but you must be patient and repeat yourself. I store these learnings in a directory of .md files and have a custom command for them: after a session, and before clearing or compacting the context, I use it to export what I’ve taught Claude about how I approach programming to an .md file that Claude can reference in a future session. This way the learning doesn’t get cleared out, and Claude can continue to grow as we pair together.

6. Working → Maintainable

After the code works, I don’t stop there. I want to improve the design. Software design is all about making the next change easy. I may ask Claude to review its own code or suggest some refactorings that will improve the design.

I’ve heard some people say that in the future the code won’t even matter anymore. We will just be prompting and communicating with an agent; the code will never be looked at, so who cares if it’s not readable? Maybe that will be the reality one day as coding agents keep improving, but today code reviews are still a reality. Humans still need to understand the code you ship, whether or not a bot generated it.

In this AI coding world, refactoring remains a huge part of my development process.

As software products evolve and grow, continual change must be supported; otherwise, features that used to take a few days start taking a few weeks. Estimates become impossible, and even the coding agents can’t add new features because no one bothered to care for the software design.

I iterate on Claude’s code and turn it into something I’m proud to ship to production.

7. Commit before prompting

Working with Claude has made me even more disciplined about committing to version control frequently. I never know what crazy ideas Claude is going to come up with, so I want to always be in a position to revert its changes and start over.

My workflow:

- prompt until the code works

- commit

- refactor with Claude or myself

- commit

- push remotely

8. Make Claude experience the failure

I used to copy and paste errors into Claude. Honestly, I still do sometimes, but I try to have Claude run the app, the tests, or whatever it needs to see the issues firsthand. This puts Claude into a feedback loop: it can try something, run it, and see the result for itself.

9. Is there a simpler way to do this?

This is one of my favorite follow-ups to Claude’s code generation. Ideally the question is specific, but if the task is small I ask it generally: “Is there a simpler way to do this?” The answer is usually yes, and Claude simplifies its approach.

Most of the time, I already see a better way and want to challenge Claude to find it. Other times I don’t see a simpler way, or don’t even fully understand the code yet, but I ask anyway because the question generally produces great results.
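As a hypothetical illustration (not actual Claude output), here is the kind of before-and-after this question tends to produce in TypeScript: a first draft carrying extra state and branches, and a simpler version with the same behavior. The `LineItem` type and both functions are invented for the example.

```typescript
// Hypothetical domain type, invented for this example.
interface LineItem {
  price: number;
  quantity: number;
  active: boolean;
}

// Before: a first draft with bookkeeping that doesn't affect the result.
function totalBefore(items: LineItem[]): number {
  let total = 0;
  let count = 0;
  for (let i = 0; i < items.length; i++) {
    const item = items[i];
    if (item.active === true) {
      const lineTotal = item.price * item.quantity;
      total = total + lineTotal;
      count++;
    } else {
      continue;
    }
  }
  if (count === 0) {
    return 0;
  }
  return total;
}

// After: same behavior, expressed as filter + reduce.
function totalAfter(items: LineItem[]): number {
  return items
    .filter((item) => item.active)
    .reduce((sum, item) => sum + item.price * item.quantity, 0);
}

const cart: LineItem[] = [
  { price: 10, quantity: 2, active: true },
  { price: 5, quantity: 1, active: false },
  { price: 3, quantity: 3, active: true },
];
console.log(totalBefore(cart), totalAfter(cart)); // 29 29
```

Both functions return the same totals; the simpler one just drops the `count` variable, the empty-cart special case, and the `else { continue; }` branch, none of which changed the result.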

10. Work from a task list

Throughout my career, I’ve had a habit of working from a task list. I make a Markdown file and organize my thoughts in it with my pair. That habit hasn’t changed with Claude; if anything, it has become even more valuable.

I have a custom slash command that instructs Claude to go through my todo list and work on the highest priority item.
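The slash command itself is just a prompt, but the selection rule it describes is simple enough to sketch. The TypeScript below assumes two things not stated above: the todo file uses Markdown checkboxes (`- [ ]` open, `- [x]` done), and items are listed in priority order, so the “highest priority item” is the first open one. `parseTasks` and `nextTask` are hypothetical names.

```typescript
// Assumed task-list format (hypothetical): one checkbox item per line,
// highest priority first, e.g. "- [ ] open item" or "- [x] done item".
interface Task {
  text: string;
  done: boolean;
}

// Parse Markdown checkbox lines; anything else (headings, notes) is ignored.
function parseTasks(markdown: string): Task[] {
  const tasks: Task[] = [];
  for (const line of markdown.split("\n")) {
    const match = /^\s*[-*]\s+\[( |x|X)\]\s+(.+)$/.exec(line);
    if (match) {
      tasks.push({ done: match[1].toLowerCase() === "x", text: match[2].trim() });
    }
  }
  return tasks;
}

// "Highest priority" is assumed to mean the first open item in file order.
function nextTask(markdown: string): Task | undefined {
  for (const task of parseTasks(markdown)) {
    if (!task.done) {
      return task;
    }
  }
  return undefined;
}

const todo = `# Todo
- [x] Add Test Containers dependency
- [ ] Point LLM test at the container database
- [ ] Refactor startup connection`;

console.log(nextTask(todo)?.text); // Point LLM test at the container database
```

Keeping priority as plain file order means reprioritizing is just moving a line, which suits a list you edit by hand alongside your pair.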

Benefits of using a task list:

- Reduces cognitive load

- Keeps you focused on one thing at a time

- Reduces the risk of forgetting things you wanted to do

Working with AI is like learning to pair program all over again.

When I first started pair programming, I had to learn a whole new way of working—communicating constantly, staying aligned, and checking in with my pair. It was uncomfortable at first, but it made me a better developer.

Working with Claude feels remarkably similar. These 10 heuristics aren't just tips and tricks—they're the foundation of building a sustainable relationship with AI that actually makes you a better programmer.

Don't treat AI like a magic wand—treat it like your most eager junior developer. One who's incredibly capable but needs guidance, mentoring, and clear direction. One who benefits from your experience but also challenges you to think differently.

The future belongs to developers who can effectively collaborate with AI while maintaining the craftsmanship that makes software maintainable and elegant. These heuristics will help you get there.

Want to consistently ship high-quality code that's easy to change and understand? Subscribe to Refactor to Grow for bi-weekly insights delivered to your inbox.